Intro

Terraform uses APIs to interact with your cloud provider's platform. Hence, many error messages thrown by your Terraform deployment come directly from the cloud platform (i.e., OCI services). In some cases, they prove very unhelpful and devoid of insight, leaving you wondering what is really breaking your deployment. These are part of what we call API errors. In my case, I spent weeks pulling my hair out trying to find what was really behind my API 404 error.

Therefore, I decided to summarize what is documented and what really happened.

Service API Errors

First, since it's always better to lay down the basics, here is a brief overview of the structure of OCI API errors.

Service error messages returned by the OCI Terraform provider include the following information:

- Error - the HTTP status and API error codes

- Provider version - the version of the OCI Terraform provider used to make the request

- Service - the OCI service responding with the error

- Error message - details regarding the error returned by the service

- OPC request ID - the request ID

- Suggestion - suggested next steps

For example, as shown in the official OCI documentation, the output is very similar to common REST API errors:

Error: <http_code>-<api_error_code>

Provider version: <provider_version>, released on <release_date>. This provider is <n> updates behind to current.

Service: <service>

Error Message: <error_message>

OPC request ID: exampleuniqueID

Suggestion: <next_steps>
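To make the structure above concrete, here is a minimal Python sketch that splits such an error into its labelled fields and breaks `<http_code>-<api_error_code>` into its two parts. The helper name `parse_service_error` is hypothetical (it is not part of the OCI provider or SDK), and it assumes each field sits on its own line, as in the template above.

```python
import re

# Labels printed by the OCI Terraform provider for a service error.
# "Error Message" is listed before "Error" so the longer label is tried first.
_FIELD_RE = re.compile(
    r"^(Error Message|Error|Provider version|Service|OPC request ID|Suggestion):\s*(.*)$"
)


def parse_service_error(text: str) -> dict:
    """Split a service error into its labelled fields (hypothetical helper)."""
    fields = {}
    for line in text.splitlines():
        match = _FIELD_RE.match(line.strip())
        if match:
            fields[match.group(1)] = match.group(2).strip()
    # The "Error" field has the form <http_code>-<api_error_code>.
    http_code, sep, api_code = fields.get("Error", "").partition("-")
    if sep and http_code.isdigit():
        fields["http_code"] = int(http_code)
        fields["api_error_code"] = api_code
    return fields


sample = """\
Error: 400-LimitExceeded
Service: <service>
Error Message: Fulfilling this request exceeds the Oracle-defined limit for this tenancy for this resource type.
OPC request ID: exampleuniqueID
Suggestion: Request a service limit increase for this resource <service>
"""

parsed = parse_service_error(sample)
```

Pulling out the `OPC request ID` this way makes it easy to log, which is handy because that identifier is what Oracle support asks for when investigating a specific request.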

Commonly returned service errors

This list is not exhaustive and includes only the error message and suggestion:

- "400-LimitExceeded": service limits exceeded for a resource

Error: 400-LimitExceeded

Error Message: Fulfilling this request exceeds the Oracle-defined limit for this tenancy for this resource type.

Suggestion: Request a service limit increase for this resource <service>

- "500-InternalError": an internal error occurred in the service

Error: 500-InternalError

Error Message: Internal error occurred

Suggestion: Please contact support for help with service <service>

When are API credentials checked?

Terraform core workflow

For the sake of completeness, let's review the Terraform core workflow and its commands.

Workflow commands:

init Prepare your working directory for other commands

validate Check whether the configuration (modules, attribute names, and value types) is valid

plan Show changes required by the current configuration

apply Create or update infrastructure

destroy Destroy previously-created infrastructure

Now here's the thing: although init downloads the cloud provider plugin, none of the first three commands is guaranteed to verify your API credentials. I naively assumed otherwise, which was clumsy, but the documentation has never been very explicit about it.

Bottom line:

In my case, terraform apply was the first step where the API credentials were actually checked, but that's not all...
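Since a successful init, validate and plan doesn't prove the credentials are right, one cheap offline check before `terraform apply` is to recompute the API key fingerprint from the public key and compare it with the configured `fingerprint`. OCI derives the fingerprint as the MD5 hash of the DER-encoded public key (the OCI documentation shows it with `openssl rsa -pubout -outform DER -in <private key> | openssl md5 -c`). The Python sketch below assumes the public key is stored in the `-----BEGIN PUBLIC KEY-----` PEM format; the function names are hypothetical. Note its limits: a match only shows that the public key file and the fingerprint agree with each other. It says nothing about whether that key is uploaded to the user in `user_ocid`, or whether that user belongs to the tenancy.

```python
import base64
import hashlib
from pathlib import Path


def api_key_fingerprint(public_pem: str) -> str:
    """Return the colon-separated MD5 fingerprint of a "BEGIN PUBLIC KEY" PEM.

    The base64 body of such a PEM is the DER-encoded public key, which is
    what `openssl rsa -pubout -outform DER ... | openssl md5 -c` hashes.
    """
    lines = (line.strip() for line in public_pem.splitlines())
    body = "".join(line for line in lines if line and not line.startswith("-----"))
    digest = hashlib.md5(base64.b64decode(body)).hexdigest()
    # Group the 32 hex digits into 16 colon-separated byte pairs.
    return ":".join(digest[i : i + 2] for i in range(0, len(digest), 2))


def fingerprint_matches(public_key_path: str, configured_fingerprint: str) -> bool:
    """Compare a public key file with the fingerprint configured for the provider."""
    pem = Path(public_key_path).expanduser().read_text()
    return api_key_fingerprint(pem) == configured_fingerprint.strip().lower()
```

For instance, `fingerprint_matches("~/oci_api_key_public.pem", "<your fingerprint>")` returning `False` means the key pair and the fingerprint were taken from different places (the path here is only an example).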

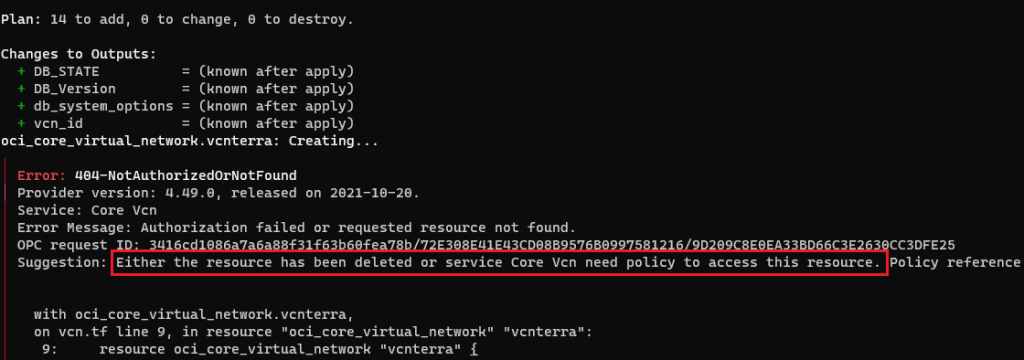

What the heck is 404-NotAuthorizedOrNotFound?

What I see

As you can see, the suggestion came nowhere close to helping me out with this conundrum.

My configuration was meant to deploy a DB system stack, and all previous commands (init, validate, plan) had run successfully.

What the OCI API errors page says

I found this note: "Verify the user account is part of a group with the appropriate permissions to perform the actions in the plan you are executing."

After struggling with it for several days, I decided to put it on ice for a few weeks.

The real Root cause

Two weeks later, I stumbled upon the source of this whole mess, and the problem had been right under my nose the entire time. I simply decided to check my terraform.tfvars file and each of the OCI authentication variables one by one.

That's where I noticed that my credentials were mixed up between two tenancies:

    # Oracle Cloud Infrastructure Authentication
    tenancy_ocid     = "ocid1.tenancy.oc1.."        # TENANCY 1
    user_ocid        = "ocid1.user.oc1.."           # USER FROM TENANCY 2
    fingerprint      = "1c:"                        # TENANCY 1
    private_key_path = "~/oci_api_key.pem"          # TENANCY 1
    public_key_path  = "~/oci_api_key_public.pem"   # TENANCY 1
    compartment_ocid = "ocid1.compartment.oc1."     # COMPARTMENT 2

- I agree, that's pretty messed up, but it can happen when you work with several tenancies from the same workstation.

- Once the discrepancy was corrected, the Terraform stack deployed as expected, since the plan itself had already run successfully.
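One way to catch this kind of mix-up before `terraform apply`, sketched below in Python, is to check that the tenancy, user and fingerprint in terraform.tfvars all come from the same profile of your OCI CLI/SDK config file (`~/.oci/config`). This assumes you keep one accurate profile per tenancy there, and that the tfvars uses simple `name = "value"` lines like mine; the parser is deliberately naive rather than a full HCL parser, and the function names are hypothetical. OCIDs alone don't reveal which tenancy they belong to, so the check is only as good as the profiles it compares against.

```python
import configparser
import re

# Matches simple `name = "value"` lines in terraform.tfvars; anything after
# the closing quote (such as a `# comment`) is ignored.
_ASSIGN_RE = re.compile(r'^\s*(\w+)\s*=\s*"([^"]*)"')

# terraform.tfvars variable name -> key used in an OCI config file profile.
TFVARS_TO_PROFILE_KEY = {
    "tenancy_ocid": "tenancy",
    "user_ocid": "user",
    "fingerprint": "fingerprint",
}


def parse_tfvars(text: str) -> dict:
    """Collect `name = "value"` assignments; HCL lists, maps and expressions are skipped."""
    values = {}
    for line in text.splitlines():
        match = _ASSIGN_RE.match(line)
        if match:
            values[match.group(1)] = match.group(2)
    return values


def profiles_matching_all(tfvars: dict, oci_config_text: str) -> list:
    """Return the profiles whose tenancy, user and fingerprint all equal the tfvars values."""
    # Treat only "=" as the key/value separator (fingerprints contain ":") and
    # disable interpolation so values are compared verbatim.
    parser = configparser.ConfigParser(delimiters=("=",), interpolation=None)
    parser.read_string(oci_config_text)
    matches = []
    # sections() omits DEFAULT, so check it explicitly; configparser also fills
    # keys that a profile leaves out from DEFAULT.
    for name in [parser.default_section, *parser.sections()]:
        profile = parser[name]
        if all(
            tfvars.get(var) is not None and profile.get(key) == tfvars[var]
            for var, key in TFVARS_TO_PROFILE_KEY.items()
        ):
            matches.append(name)
    return matches
```

For example, `profiles_matching_all(parse_tfvars(Path("terraform.tfvars").read_text()), Path("~/.oci/config").expanduser().read_text())` (with `from pathlib import Path`) returning an empty list means no single profile matches all three values, which is exactly the situation in the terraform.tfvars shown above.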

Conclusion

safely saved for each of your environments/repos (GitLab, OCI DevOps, Terraform Cloud).

Thanks for reading
